RDM Weekly - Issue 009

A weekly roundup of Research Data Management resources.

Welcome to Issue 9 of the RDM Weekly Newsletter!

The content of this newsletter is divided into 3 categories:

☑️ What’s New in RDM?

These are resources that have come out within the last year or so.

☑️ Oldies but Goodies

These are resources that came out over a year ago but remain excellent references to return to as needed.

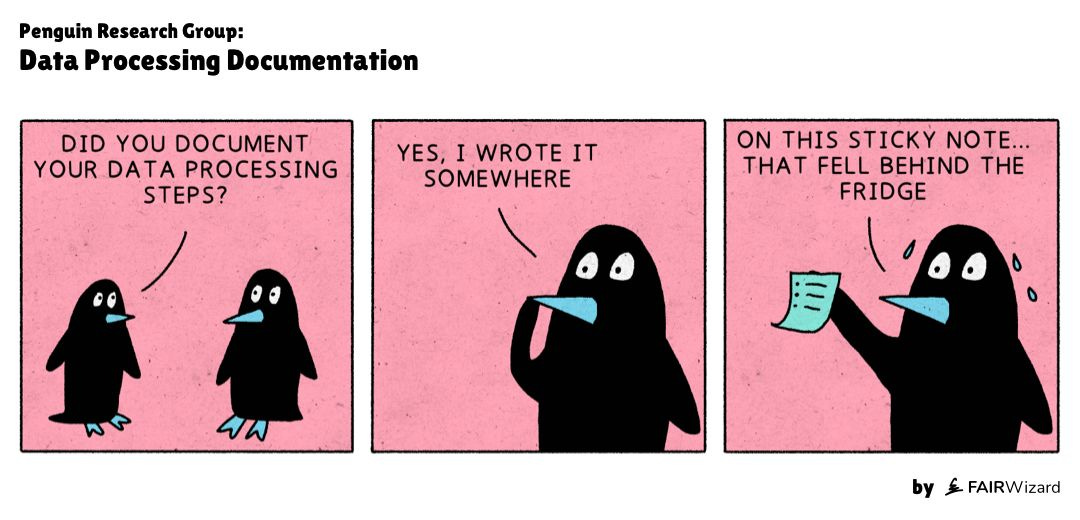

☑️ Just for Fun

A data management meme or other funny data management content.

What’s New in RDM?

Resources from the past year

1. 10 Lessons from “Tidy Data” on its 10th Anniversary

Last fall marked the 10-year anniversary of “Tidy Data” by Hadley Wickham, published in the Journal of Statistical Software. In honor of this influential paper, Sam Tyner-Monroe wrote a brief summary of the 10 most valuable lessons she has learned from “Tidy Data.”
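For readers who have not yet met the paper's core idea (each variable forms a column and each observation forms a row), here is a minimal Python sketch of reshaping a "wide" table into a tidy one. The function name `wide_to_long`, the field names, and the scores are all made up for illustration; this is not taken from the paper or from the summary above.

```python
def wide_to_long(rows, id_field, var_name, value_name):
    """Reshape wide records (one column per measurement) into tidy long records."""
    tidy = []
    for row in rows:
        for column, value in row.items():
            if column == id_field:
                continue  # the identifier is repeated on every tidy row instead
            tidy.append({id_field: row[id_field], var_name: column, value_name: value})
    return tidy


# Hypothetical, made-up data: one row per person, one column per treatment.
wide = [
    {"person": "A", "treatment_a": 2, "treatment_b": 11},
    {"person": "B", "treatment_a": 16, "treatment_b": 1},
]

# After reshaping, each row is a single observation: person, treatment, score.
tidy = wide_to_long(wide, "person", "treatment", "score")
for record in tidy:
    print(record)
```

In the tidy version, "treatment" becomes a variable in its own right rather than being hidden in the column names, which is what makes filtering, grouping, and plotting by treatment straightforward.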

2. What is Data Stewardship and Why is It Important?

In today’s digital world, data has become one of the most valuable resources for organizations. From businesses and governments to researchers and nonprofits, data drives decision-making, innovation, and growth. However, with the increasing reliance on data comes the critical need to manage it effectively and responsibly. This is where data stewardship comes into play. But what exactly is data stewardship, and why is it so important? This article answers these questions.

3. DataLumos Repository

ICPSR is committed to ensuring that valuable data resources remain accessible and easy to locate. Therefore, they established an archive within openICPSR called DataLumos where researchers can deposit data that they believe may be at risk or hard to find in the future. DataLumos accepts deposits of public data resources from the community and recommendations of public data resources that ICPSR itself might add to DataLumos.

4. Data Management and Sharing Plans (DMS) Lesson Set

This set of focused training materials (“microtrainings”) is designed to equip learners with strategies for developing NIH Data Management and Sharing (DMS) Plans that reflect FAIR (findable, accessible, interoperable, reusable) data management and sharing best practices. The materials, which include documents and links to recordings, describe community-developed best practices for all stakeholders who create or work with DMS Plans, and will help them develop plans that optimize the findability, accessibility, interoperability, and reusability of shared scientific data.

5. Ethically Making & Sharing Data

This zine is based on the "Ethically Making & Sharing Data" workshop offered at Penn Libraries by Cynthia Heider. It summarizes some of the ethical challenges researchers face when choosing to work with data and provides actionable steps for a more ethical data practice at each phase of the data lifecycle.

6. Creating and Validating Standardized R Project Structures that are Psych-DS Compliant-ish

Making psychological code and data FAIR is hard, in part because different projects organize their code and data very differently. Sometimes this is for good reasons, such as the demands of a given project, its study design, or the types of data it uses. Other times it is for poorer reasons, such as people not having spent much time thinking about digital project management or not having received any training in it. The {psychdsish} R package creates a standardized project skeleton that is compliant-ish with the Psych-DS data standard and also adds several features to improve reproducibility, such as Quarto templates, a readme template, a CC BY license, and a .gitignore with reasonable defaults. It also has a validator function that lets users check whether their project is still compliant with the standard and, if not, tells them how to fix it.

Oldies but Goodies

Older resources that are still helpful

1. Best Laid Plans: A Guide to Reporting Preregistration Deviations

Psychological scientists are increasingly using preregistration as a tool to increase the credibility of research findings. Many of the benefits of preregistration rest on the assumption that preregistered plans are followed perfectly. However, research suggests that this is the exception rather than the norm, and there are many reasons why researchers may deviate from their preregistered plans. Preregistration can still be a valuable tool, even in the presence of deviations, as long as those deviations are well documented and transparently reported. The focus of this article is on providing a framework for transparent and standardized reporting of deviations from preregistrations. Figures 2 and 3 also remind us that although some reasons for deviation are unavoidable, many can potentially be prevented through good data management planning.

2. Measuring Data Rot: An Analysis of the Continued Availability of Shared Data from a Single University

The implementation of data sharing mandates by funding agencies and journals within the past decade has led to an increase in open research data available for download and use. As data sharing mandates become established and data repositories flourish, questions remain about how stable this data actually is and how its availability may depend on how and where the data is shared. Content on the internet can disappear for a number of reasons, including link rot (i.e., dead links), content drift (i.e., links that no longer point to the original content), and failure to update permanent identifiers (i.e., DOIs that no longer resolve). To determine where data is shared and what data is no longer available, this study analyzed data shared by researchers at a single university. Based on the findings, the author provides best practice guidance: share data in a data repository using a permanent identifier.
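To make the difference between link rot and content drift concrete, here is a minimal Python sketch that classifies the result of checking a shared-data link. It is not the method used in the study. It assumes you recorded a SHA-256 checksum of the file when it was first shared, and the function name `classify_link` and its parameters are hypothetical. The HTTP request itself is left out so the example runs offline.

```python
import hashlib


def classify_link(status_code, body, expected_sha256):
    """Classify one link check.

    status_code: HTTP status from the request, or None if the request failed
                 entirely (assumption: the caller handles the network call).
    body: bytes returned by the server.
    expected_sha256: checksum recorded when the data was first shared.
    """
    # A failed request or an HTTP error status is treated as a dead link.
    if status_code is None or status_code >= 400:
        return "link rot"
    # The link resolves, but the bytes are not the ones originally shared.
    if hashlib.sha256(body).hexdigest() != expected_sha256:
        return "content drift"
    return "available"


# Made-up example file and the checksum recorded at deposit time.
original = b"id,score\n1,10\n"
recorded = hashlib.sha256(original).hexdigest()

results = [
    classify_link(200, original, recorded),
    classify_link(404, b"", recorded),
    classify_link(200, b"<html>Welcome to our new site</html>", recorded),
]
print(results)
```

A checksum comparison is only one way to detect drift, and it will also flag legitimate new versions of a dataset, so treat a "content drift" result as a prompt to look more closely rather than as a verdict.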

3. Yoda Metadata Editor

Yoda, developed by Utrecht University, is a system for reliable, long-term storage and archiving of large amounts of research data during all stages of a study. The Yoda metadata editor, however, is open to anyone who wants or needs a graphical user interface to fill in metadata for their own data package. The metadata can then be downloaded as a JSON file and added to a data package. The fields of the Yoda metadata editor are based on the DataCite metadata schema.

4. Code and Data for the Social Sciences: A Practitioner’s Guide

This handbook, now over 10 years old, is still very relevant today. It translates insights from experts in code and data into practical terms for empirical social scientists. The authors acknowledge that often, when we are solving problems with code and data, we are solving problems that have been solved before, better, and on a larger scale. Drawing on their own experiences and what they’ve learned from experts in fields such as computer science, the authors provide solid advice for working more efficiently with code and data, with examples provided for those who work in Stata.

Just for Fun

Thank you for checking out the RDM Weekly Newsletter! If you enjoy this content, please like, comment, or share this post! You can also support this work through Buy Me A Coffee.